Following in the footsteps of our previous summer intern, Jesse Kosunen, a fresh XR Design graduate from Metropolia UAS, finalized the PedaXR – Presentation Simulation during their internship at Helsinki XR Center this spring. Now they share with us what their work on the PedaXR project included and what they learned during the process!

Text and pictures below by Jesse Kosunen.

Hi, I am Jesse, a recently graduated XR Designer from the XR Design degree programme at Metropolia UAS. I have now worked at Helsinki XR Center for almost six months as an intern, and my main focus has been developing VR (Virtual Reality) applications as part of a small developer team. As an XR Designer, I like to make sure that the applications look good, run smoothly and are fun to use!

I joined the PedaXR – Presentation Simulation project during its development in January 2023. It is a VR simulation where the user can practice giving a presentation in front of a virtual audience. At that point, the project’s 3D assets, environments and animations were mostly done, but its functionality and game mechanics were still in the early stages.

I was responsible for the design and development of the application’s functionalities, including VR interactions, game mechanics, sound effects and behavior patterns of the virtual audience. In addition, I paid a lot of attention to the performance of the application and especially to the user experience, which was greatly helped by feedback from test users.

VR is the foundation

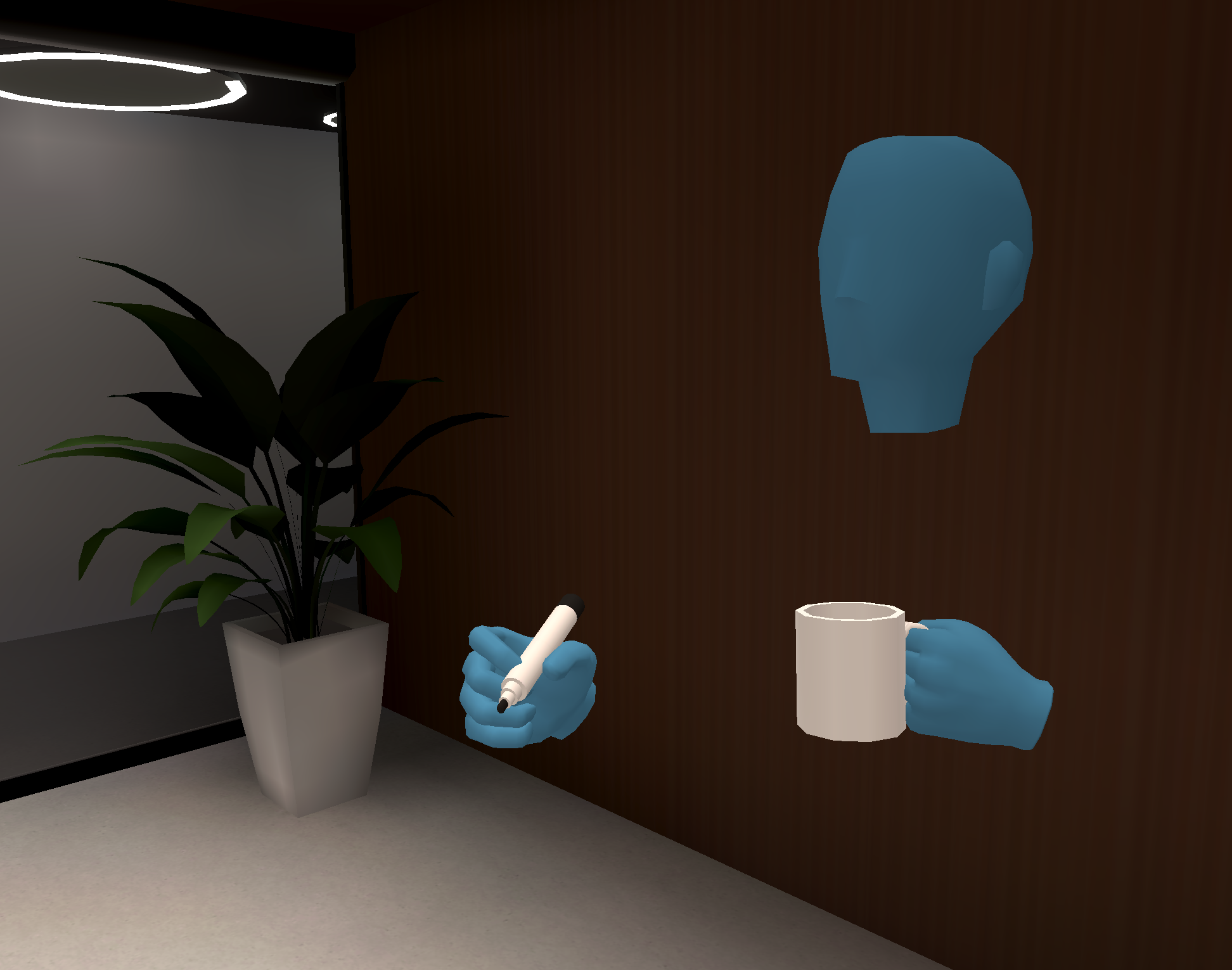

When I started working on this project I first looked at its VR features. Ideally, movement and hand interactions would be natural and intuitive for the user. If this is not the case, they can easily get frustrated.

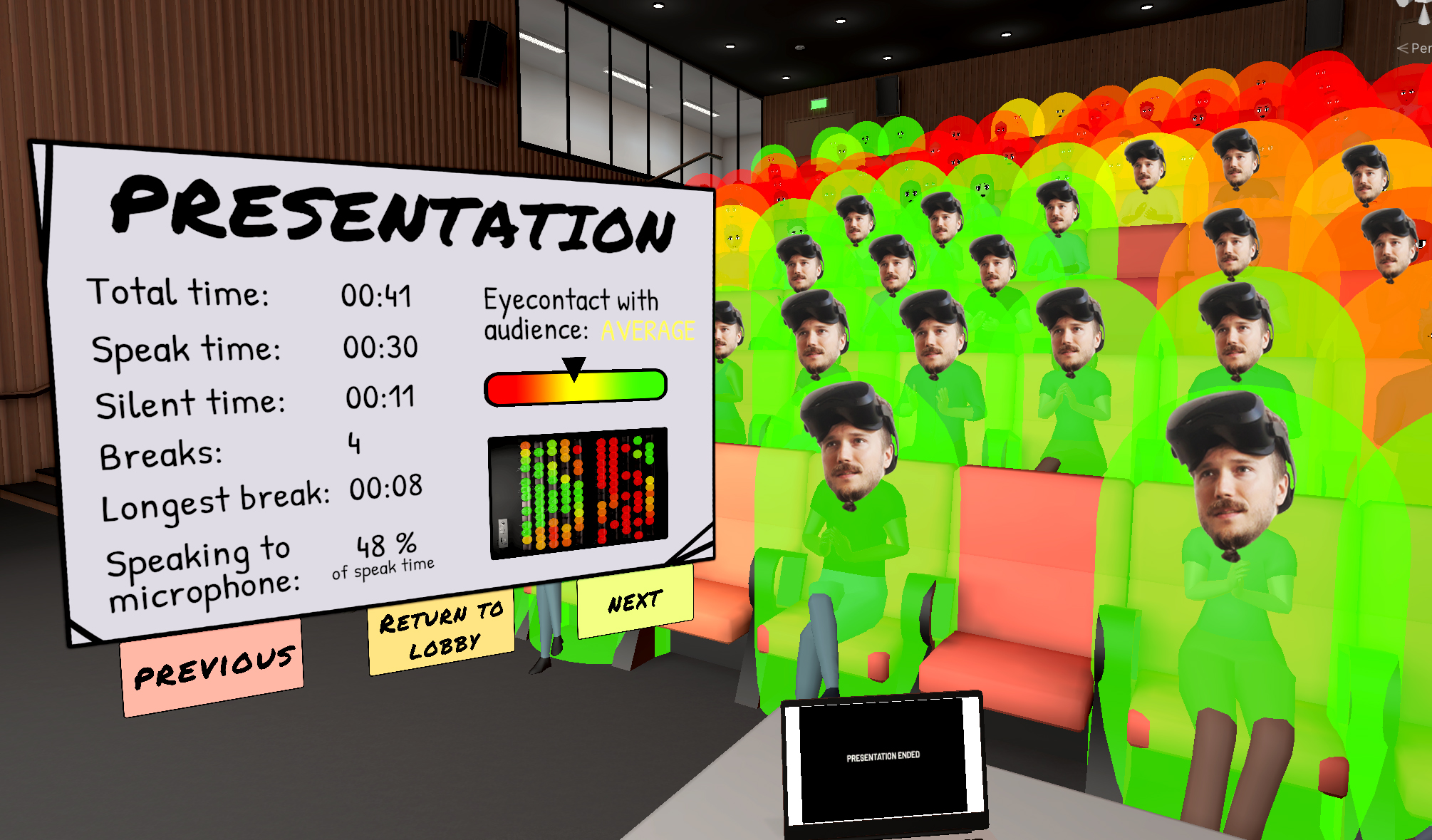

I paid special attention to the interactions between the player and different kinds of objects because they are common in VR applications. For example, I made all the objects within the player’s reach movable and added indicators to make them easier to grab. I also adjusted the player’s grabbing mechanics to be more sensitive and made sure that the objects are placed correctly in the hand. In addition, I prevented the player from “bumping” into the objects they were holding. We had noticed that the force of a grabbed object could easily launch the player into the sky, especially if the player was standing on the object while grabbing it.

We wanted the user to be able to explore the game environment only by physically moving in the space. This reduces the risk of VR motion sickness and makes the app more accessible to inexperienced players. That’s why there was no need to build separate movement mechanics such as teleportation or smooth locomotion.

Have a cup of coffee and write something on the whiteboard.


Simple mechanics create fun experiences

When the VR side was in relatively good shape, it was time to start planning and implementing the application’s other functionalities. We wanted the app to be as simple as possible to use and easy to learn. In this way, the user could focus on the most important thing: practicing their own presentation.

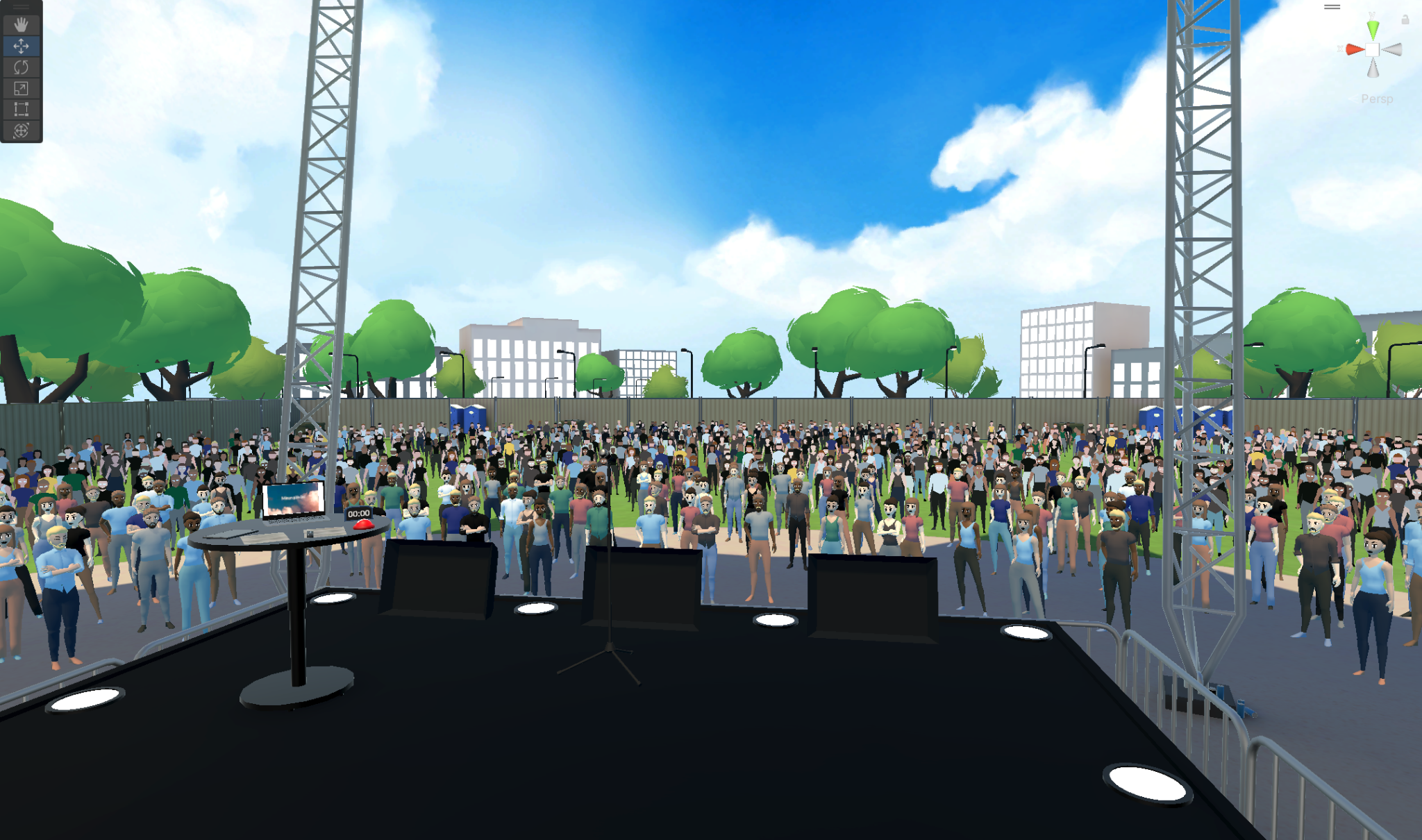

Our 3D artists had built four different presentation spaces for the application. The spaces are of different sizes and each can accommodate a different number of audience members. The user’s “home space” is a dim back room, where they can choose the presentation scene they want to go to next.

Lots of people in the Stage Scene…

…and not so much in the Meeting Room.

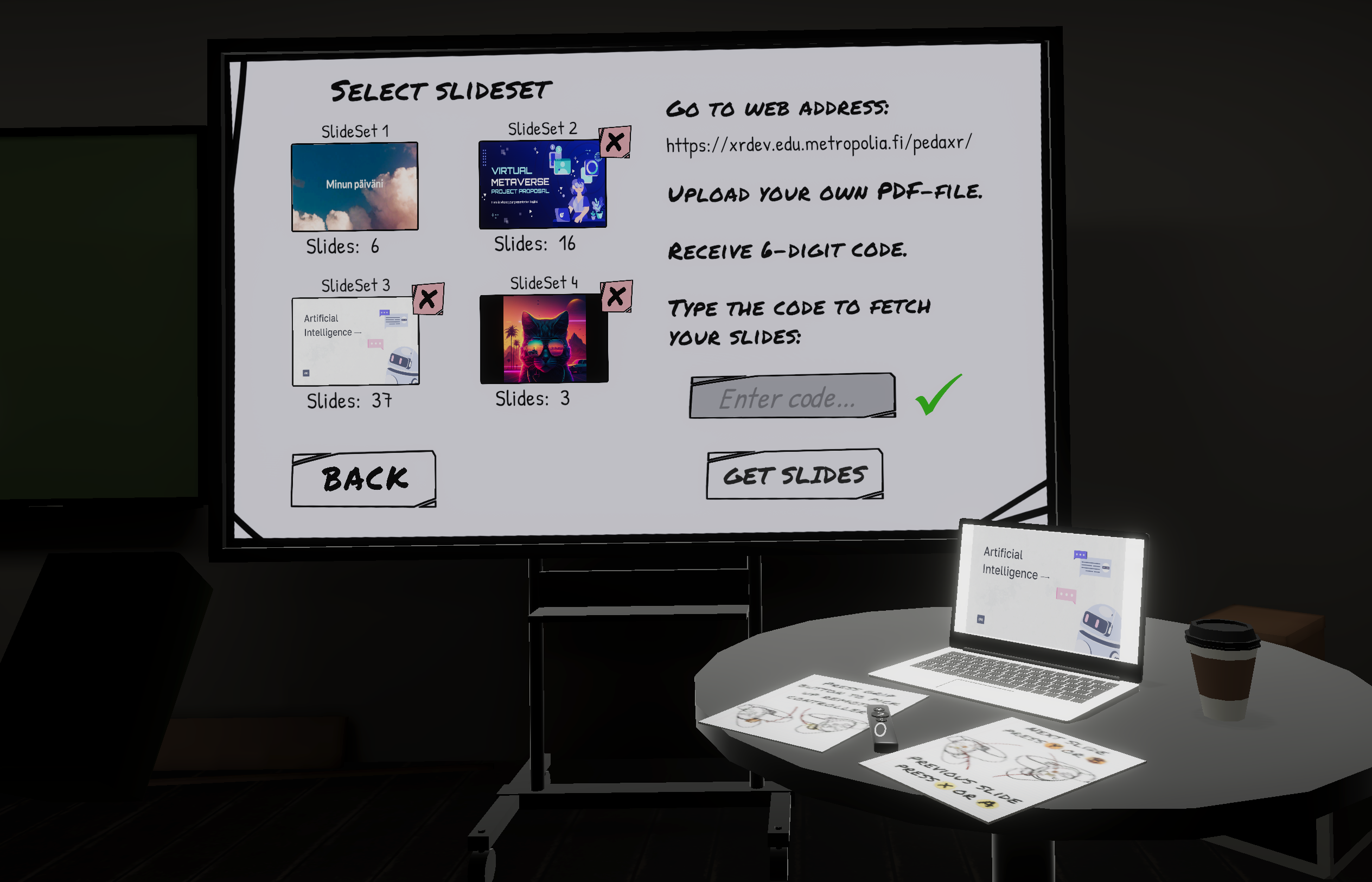

In the back room, we gave the user an opportunity to upload their own slideshow to the application. This is done by uploading a presentation in PDF format to a specific website, after which you get a download code for your presentation. When you enter the code in a designated place in the application, the slideshow is downloaded. Then you can just select it and preview it on the virtual laptop next to you. You can move forward and backward through the slides with the virtual remote control found on the table.

Download your own presentations and practice with them.

In the presentation scenes, the user can practice giving a presentation in front of an audience. The presentation starts when the player presses the START button and ends when all the slides have been shown or the player interrupts it by slapping their hand on the big STOP button on the table. During the presentation, the player can see the elapsed time on a timer on the table and their slides on the computer in front of them. There is also a larger screen or screens intended for the audience to follow the presentation.

Everything is ready for the presentation to start.

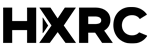

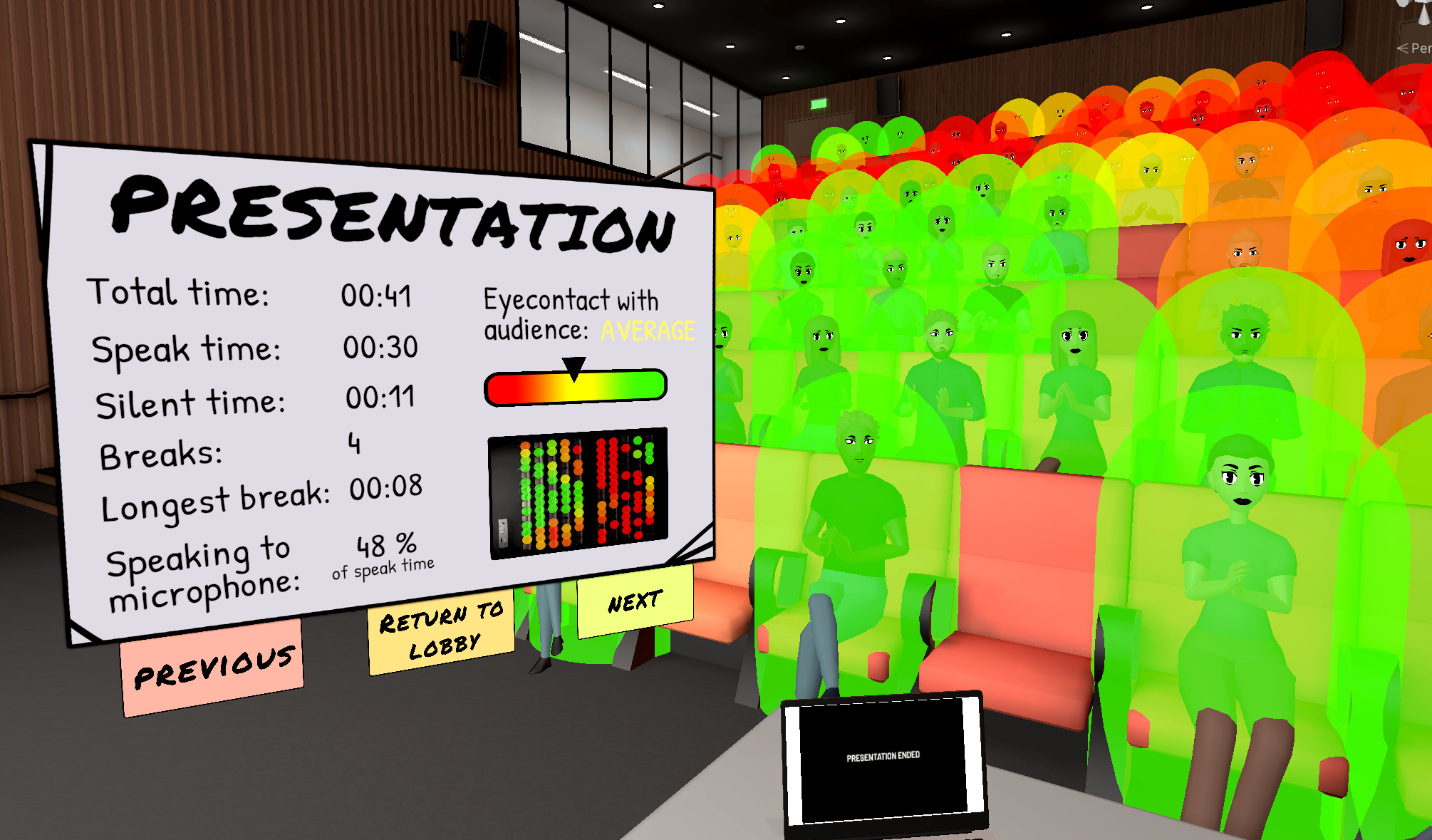

After the presentation, the user is given a final report on how it went. The report includes the total length of the presentation, speaking time, number of breaks and how much time was spent on each slide. The player is also told how well they kept eye contact with the audience during the performance. This is indicated by a color scale from green to red for each viewer. After reading the report, the player can either try again or return to the lobby and select another scene and slide set.

User gets to see data on how their presentation went.
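
As a rough illustration of the report described above, here is a minimal Python sketch of two of its parts: time spent per slide and the green-to-red eye contact color. This is not the project's actual code. The `SlideVisit` record, the function names and the linear red-to-green mapping are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class SlideVisit:
    """Hypothetical record of one interval during which a slide was shown."""
    slide: int    # index of the slide
    start: float  # seconds since the presentation started
    end: float    # seconds since the presentation started


def time_per_slide(visits: list[SlideVisit]) -> dict[int, float]:
    """Sum the seconds spent on each slide, including repeat visits."""
    totals: dict[int, float] = {}
    for visit in visits:
        totals[visit.slide] = totals.get(visit.slide, 0.0) + (visit.end - visit.start)
    return totals


def eye_contact_color(ratio: float) -> tuple[int, int, int]:
    """Map an eye contact ratio to RGB: 0.0 is red, 1.0 is green.

    The linear blend is an assumption; values outside [0, 1] are clamped.
    """
    r = min(max(ratio, 0.0), 1.0)
    return (round(255 * (1 - r)), round(255 * r), 0)
```

Going back to an earlier slide simply adds another `SlideVisit` for it, so repeat visits are counted in that slide's total.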

AI (Audience Interactions)

We wanted the audience to react to the player and give some audiovisual feedback on the course of the presentation. The spectators gradually turn gray if the player hasn’t looked in their direction for a while. They also change their behavior depending on how well the presentation is going. Our 3D artists had created several animations for the different emotional states the audience members can have. These range from boredom to anger and from cheering to laughing.

I wanted each member of the audience to be an individual, so I gave each character different parameters for how they react to the player’s actions. For example, some of them may get bored more easily or even start to boo if the player takes overly long breaks and doesn’t keep the presentation going. Others may give encouraging gestures or cheer during the final applause if the performance goes really well. Some look at the player intensely while they are presenting and others are lost in their own thoughts. The audience also reacts if the player speaks too quietly or holds the virtual microphone too far away.

People can get bored and they will show it. They will also gray out if you don’t keep regular eye contact.

If you see the audience booing, you know you have done something wrong.
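
To make the idea of per-character parameters more concrete, here is a small Python sketch of one audience member. It is only an illustration under assumptions: the class, the parameter names, the default values and the three reaction names are hypothetical, not the ones used in the application.

```python
from dataclasses import dataclass


@dataclass
class AudienceMember:
    # All values below are hypothetical per-character tuning parameters.
    gray_after: float = 5.0       # seconds without eye contact before graying starts
    gray_duration: float = 10.0   # seconds to fade fully to gray after that
    boo_after_pause: float = 8.0  # length of a speaking pause that triggers booing
    unseen_for: float = 0.0       # seconds since the player last looked at this member

    def update(self, dt: float, looked_at: bool) -> None:
        """Advance the timer by dt seconds, resetting it on eye contact."""
        self.unseen_for = 0.0 if looked_at else self.unseen_for + dt

    def grayness(self) -> float:
        """Return 0.0 (full color) to 1.0 (fully gray)."""
        over = self.unseen_for - self.gray_after
        return min(max(over / self.gray_duration, 0.0), 1.0)

    def reaction(self, pause_length: float) -> str:
        """Pick an animation state from the length of the current speaking pause."""
        if pause_length >= self.boo_after_pause:
            return "boo"
        if pause_length >= self.boo_after_pause / 2:
            return "bored"
        return "attentive"
```

Giving two members different `boo_after_pause` values is enough to make one boo at a pause the other still sits through, which is the kind of individuality described above.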

Sounds, not silence

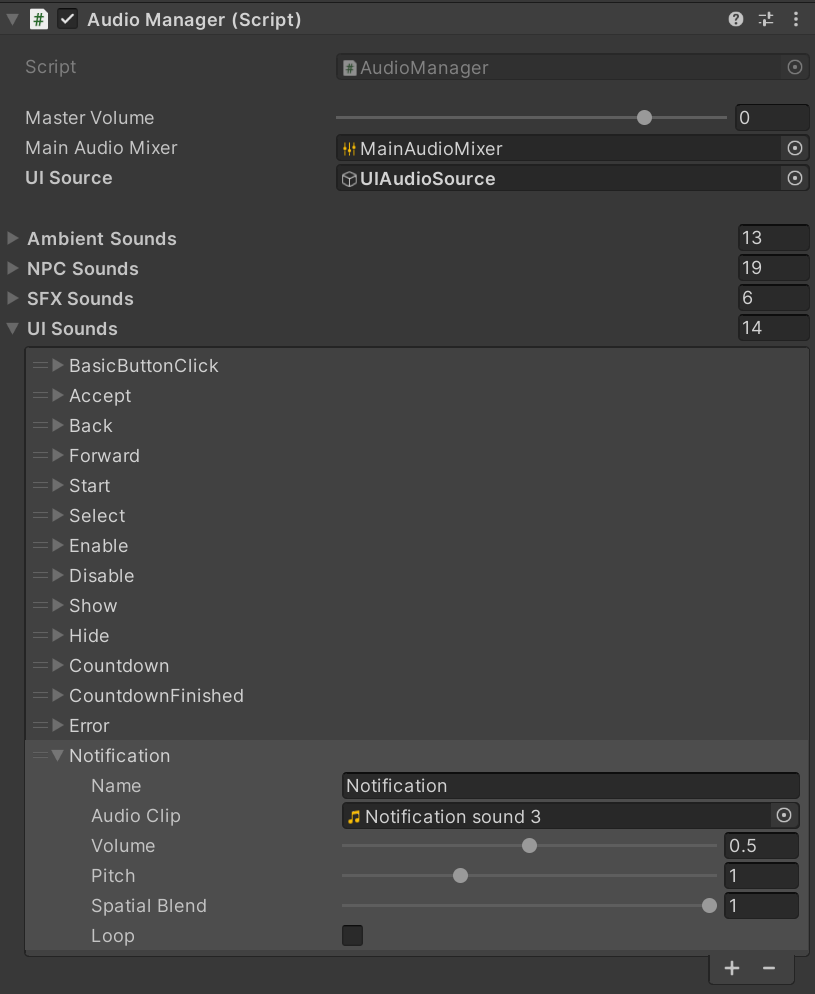

Sounds are easily overlooked in the early stages of app development, even though they are an important part of the whole. For this application I built a separate Audio Manager through which the sounds are organized and played centrally. This makes it much easier to change and add sound effects or music along the way.
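
The general idea of a central audio manager can be sketched in a few lines of Python. This is a simplified illustration, not the Audio Manager built for the project: the class layout, the sound names, the clip file names and the `backend` callable that stands in for the engine's audio playback are all assumptions.

```python
class AudioManager:
    """Central registry that maps sound names to clips and plays them by name."""

    def __init__(self, backend):
        # backend is a hypothetical callable (clip, position) that plays the clip.
        self._backend = backend
        self._clips: dict[str, str] = {}

    def register(self, name: str, clip: str) -> None:
        """Add a sound, or replace the clip behind an existing name."""
        self._clips[name] = clip

    def play(self, name: str, position=None) -> None:
        """Play a registered sound, optionally at a position for spatial audio."""
        if name not in self._clips:
            raise KeyError(f"Unknown sound: {name}")
        self._backend(self._clips[name], position)
```

Because the rest of the code only refers to names such as `"button_press"`, swapping a sound effect means changing one `register` call instead of every place that plays it.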

Sounds are important not only for creating the atmosphere (e.g. relaxing music from the back room radio, audience chatter echoing in the space before the presentation starts and resounding applause at the end), but also for giving feedback. The sounds help the player understand when the user interface window pops up, whether the button registered a press or the slide show was successfully loaded. In VR applications, sounds help to tie the whole scene together, especially when they are spatial, i.e. they sound like they are coming from a certain location in the environment.

Sounds are neatly in order and easy to change.

Testing makes perfect

During the development phase, the application and its functions may seem simple and clear to the developers themselves, but the truth is only revealed when others try it, especially those who have no prior experience of using VR headsets.

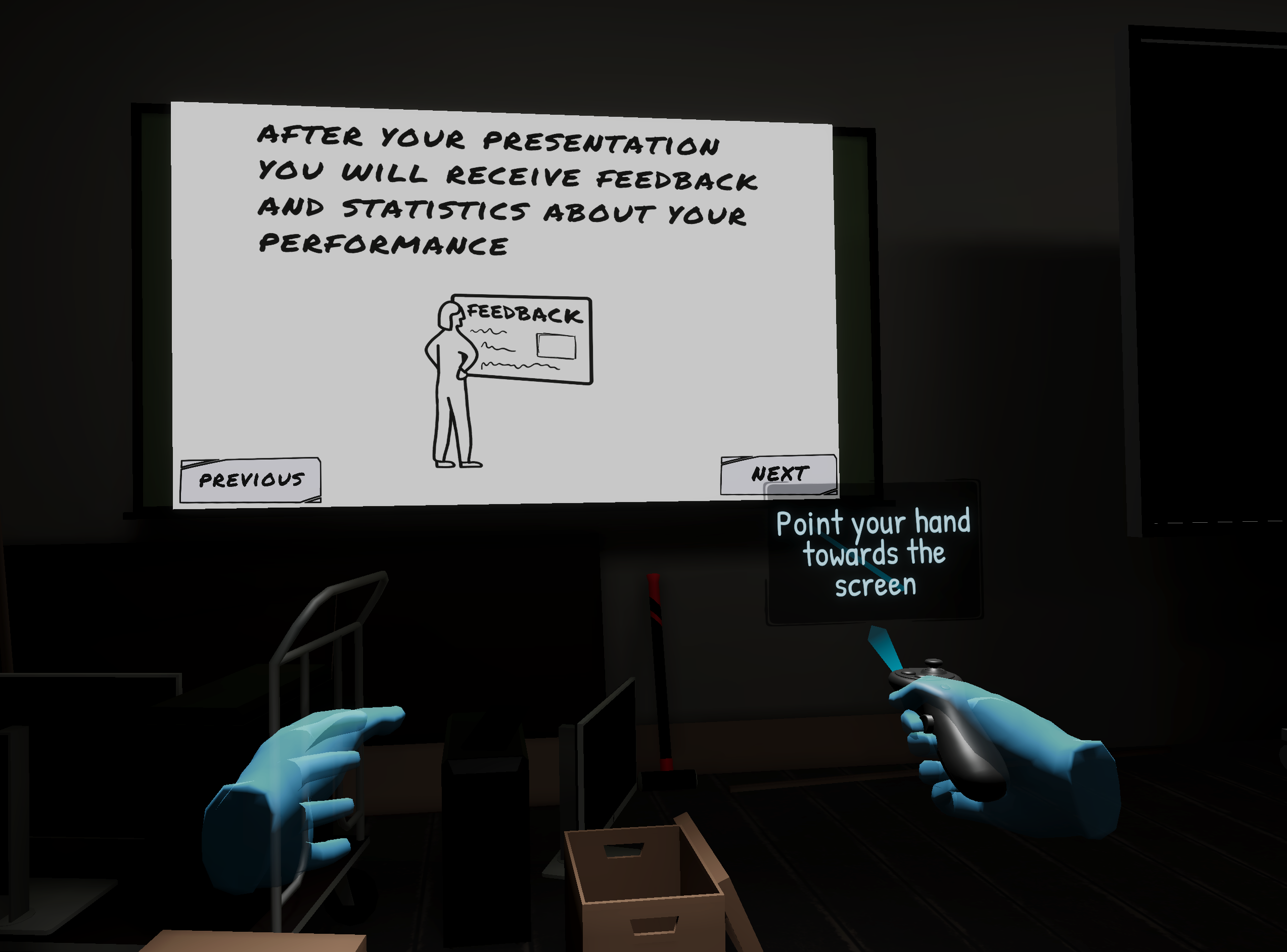

Already in the first tests, it became clear that the application needed some kind of instructions so that the players would know how to use it. For this we designed a separate starting scene, where players could freely browse and read through a description of how the application works and which buttons are used for what. However, in the following test we noticed two problems:

- The players didn’t have patience to read the instructions carefully, but scrolled through them quickly and were then completely lost when they got to the presentation stage.

- The most inexperienced VR users needed help to even proceed to the next page when reading the instructions.

After this we realized that you can’t force players to read, but you can force them to do. I made a small mandatory tutorial, where the player is instructed to take a virtual remote control from the table and use it to change slides on the computer screen. I even put a bright floating arrow above the remote control, so that it will not be missed. In this way, the player inevitably learns the mechanics necessary for the application before being allowed to go further. I also added arrows for all the important things in each presentation scene and prevented the player from starting the presentation before they had picked up the virtual remote control.

To solve the latter problem, I put a virtual VR controller in the player’s hand, which shows them how to proceed to the next page of instructions. In the following tests, we found that users now managed to use the application significantly better without external help.

Written instructions can be helpful but you can’t be sure that everyone reads them.

You have to be clear on what the user needs to do when they are playing for the first time.
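
The rule that the presentation cannot start before the remote control has been picked up boils down to a simple gate. Below is a minimal Python sketch of that logic, with a hypothetical class and method names rather than the application's real code.

```python
class PresentationGate:
    """Blocks the START button until the player has picked up the remote."""

    def __init__(self) -> None:
        self.remote_picked_up = False
        self.started = False

    def pick_up_remote(self) -> None:
        """Called when the player grabs the virtual remote control."""
        self.remote_picked_up = True

    def press_start(self) -> bool:
        """Return True if the presentation starts, False if it is still locked."""
        if not self.remote_picked_up:
            return False
        self.started = True
        return True
```

A `False` result from `press_start` is a natural point to show the floating arrow above the remote again, so the player sees what is still missing.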

Final words

Overall, this was a very interesting project and I learned a lot while working on it. It was especially nice to get feedback from test users and see how the application evolved based on it. There was a lot to do in a relatively short time, so it was quite a challenge to keep everything nice and organized throughout the project. That’s definitely something I’ll keep focusing on in future projects as well!

To see previous news about our trainees’ projects, head over to the Trainee news section.
