This time we dive into the process of developing Vauhtia, a virtual rehabilitation application, led by our HXRC trainees Miro and Julia. We'll start with Julia's thoughts and then hand the mic to Miro.

Text & images by Julia Hautanen

Hi, I’m Julia Hautanen and I study 3D animation and visualisation at Metropolia University of Applied Sciences. In the spring of 2023, I did my internship at Helsinki XR Center in the role of a 3D artist.

We started a new project as a team of four. The project's working name was Vauhtia, and it aimed to speed up access to AI-based virtual technologies in rehabilitation through online collaboration. The team included two coders, Miro Norring and Leo Virolainen, and two 3D artists, Suvi Pietiläinen and me.

The purpose of the project was to build educational scenarios in a VR environment in which a student helps a person at home relearn a specific everyday function using various aids, supported and supervised by other students and the teacher. The planned case studies covered various functions, such as brushing teeth and getting dressed, as well as different patient conditions, for example memory loss and a hand injury. As the project progressed and time was limited, we decided to reduce the number of tasks and ended up developing a scenario in which the student teaches a person with memory loss how to make coffee.

Starting the project!

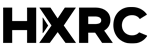

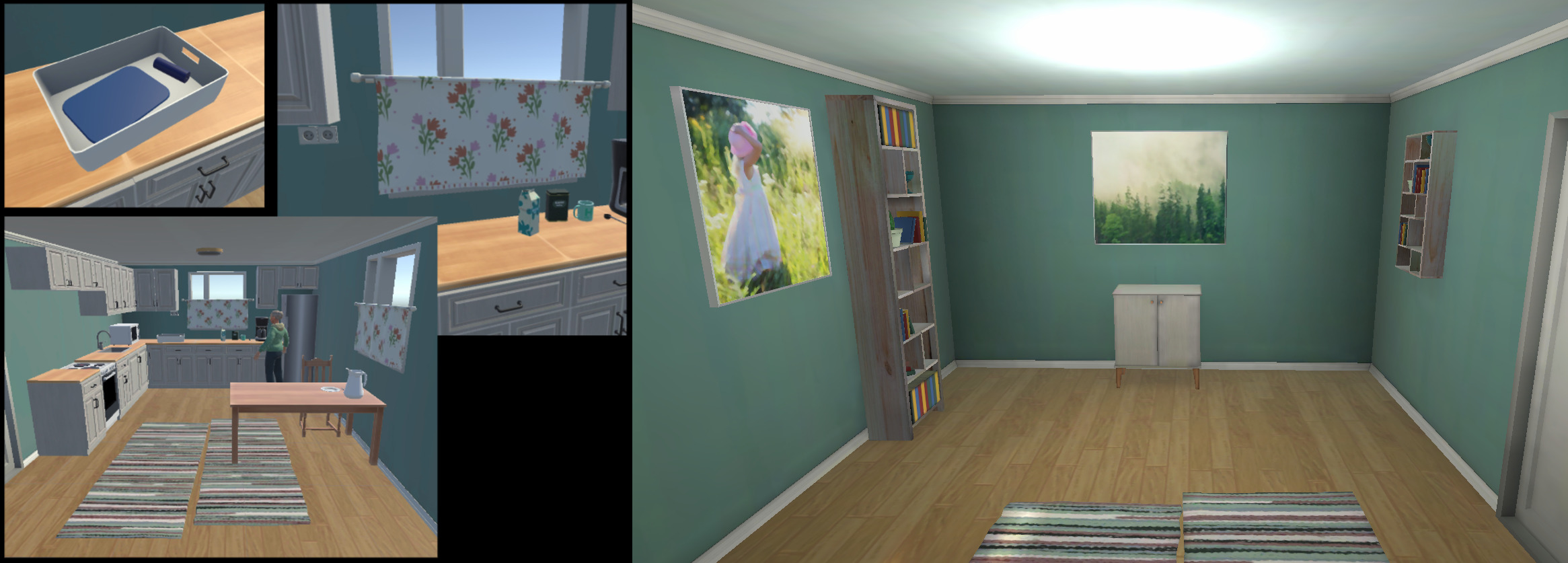

At the start of the project, Suvi and I set out to investigate which 3D models were essential. We studied the Vauhtia project as a whole, taking into account all the desired scenarios. Early on, we noticed that the person being rehabilitated was referred to as Grandma, so we chose to design a traditional Finnish home. We made the home two stories high, because one of the planned teaching situations involved climbing the stairs. We soon realized that modeling the entire house was a large undertaking. This confirmed our suspicion that focusing only on the kitchen scenarios made sense if we wanted the application in presentable condition by the deadline. Below is a picture of the house plan and the proposed fixed elements for each room that would be suitable for modeling.

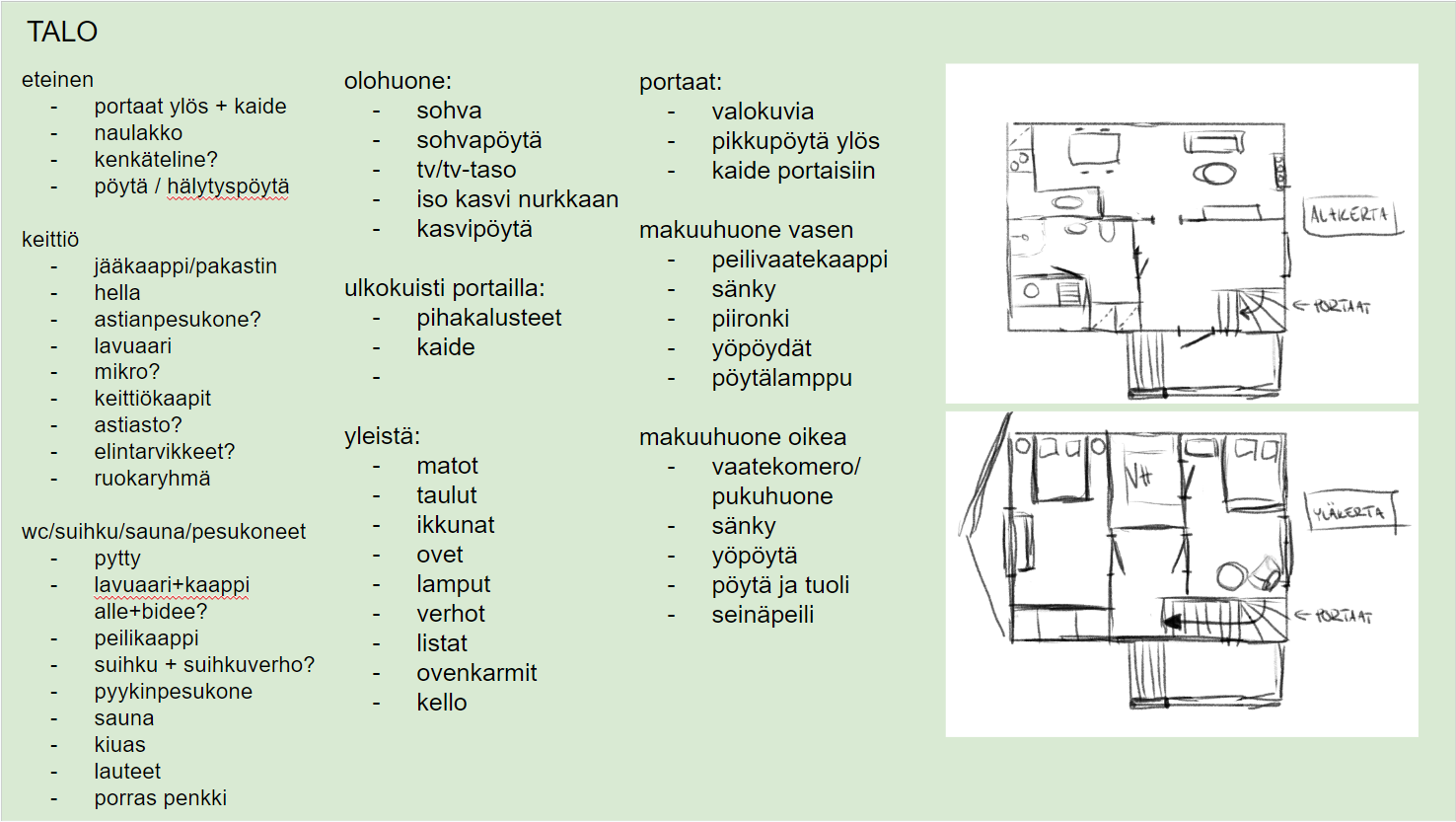

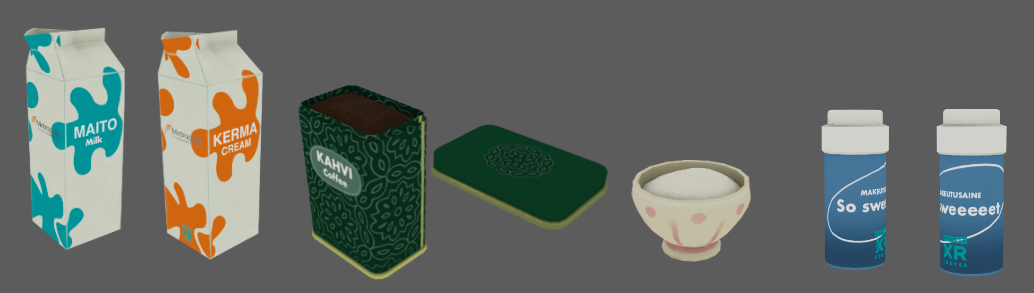

Since the people being rehabilitated are also taught to use various aids, we needed accurate information about the aids they use. For this, we visited the Myllypuro campus, where social and healthcare skills are taught. We were shown the aids and how to use them, and we took reference pictures and measurements. Non-slip and thickening aids were needed for the coffee-making scenario.

To keep the style consistent between artists, we decided on the project's visual style at the very beginning; a comprehensive mood board is a good tool for this. The coders told us that the project uses Ready Player Me (https://readyplayer.me/) to create the characters and rigs, which set the style for the whole application: slightly stylized rather than realistic.

The 3D part of the project

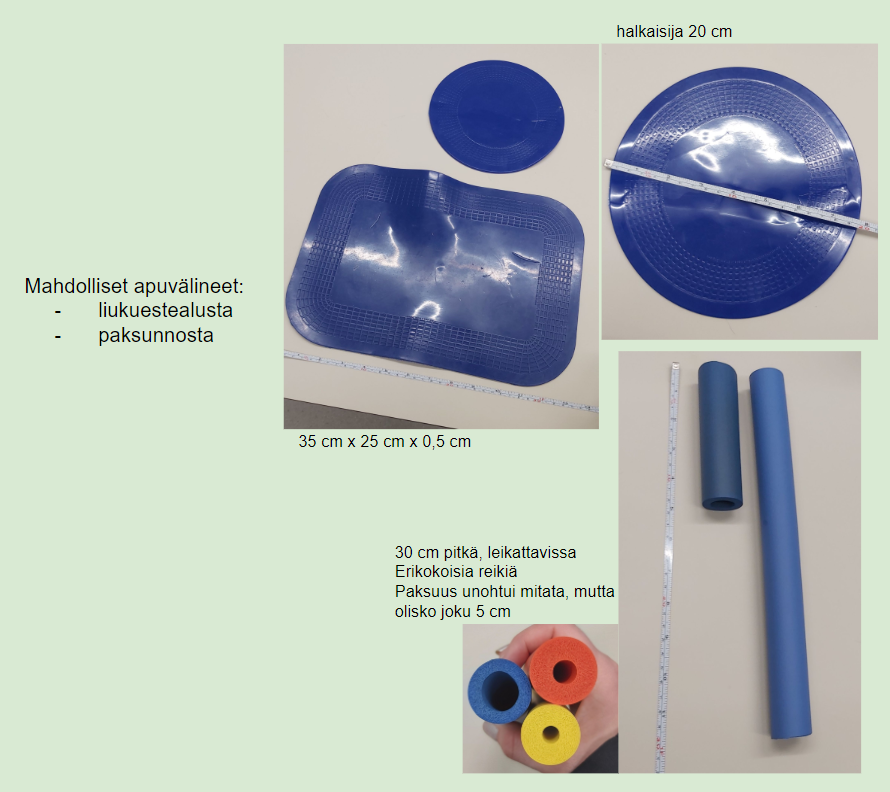

We used Autodesk Maya for modeling. First, I blocked out how the whole house could look if the full project were completed. We planned for a stairwell to act as a menu for the different scenarios: by choosing a door, you could play a different situation. At this point, Suvi and I also divided the tasks. She would model the kitchen appliances, cabinets, dining set and coffee maker. I took on the room, the character, the models needed for making coffee and the aids, and I also did the animations, the voice acting and the foley sounds. After blocking out the whole house, I modeled the kitchen and imported it into Unity so that Suvi could start fitting the furniture.

After that, I set out to create the character with memory problems, known as Grandma, and get her to the coders as quickly as possible so that they could build the required interactions with her in the application.

I created a character in Ready Player Me that I thought would be easy to modify into an older woman with a cute grandma vibe. In Blender, I made the character shorter and sturdier and lowered the bun. For clothing, I changed the sneakers to slippers, modified the hoodie and added glasses. Then I edited the textures: I turned the hoodie into a homely floral granny shirt, gave the slippers a matching color, and added wrinkles, liver spots and blood vessels to the skin texture.

Next, I checked the rig that came with the Ready Player Me character and added FK/IK switches to make animating easier. When the rig was ready, Miro and I discussed which animations the character needed; I blocked them out in Blender and transferred them to Unity. If the project continues, the animations can be refined further.

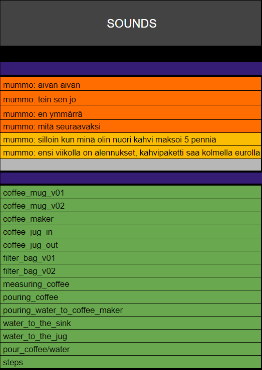

Voices were also needed to bring the game to life. When you talk to Grandma, she answers, and if the instructions are unclear, she says that she didn't understand. I wrote voice lines for different situations and recorded and edited them in Audacity. At the same time, I also created foley sounds for the coffee making.

When the character was ready, I moved on to the kitchen assets and modeled the tools used for making coffee. Because the student instructs the character to use the necessary aids, Miro and I agreed that the interactable aids should be kept in a separate box from Grandma's other items. To make the VR experience more pleasant, I also decorated the rest of the kitchen so that the room doesn't feel empty. To save time, I reused props made for other projects, such as shelves made by Sara Kauppinen and a cabinet made by Suvi for the Kotikulma project.

For further development, I designed the tools needed for the next teaching scenario. It was easiest to continue in the already finished kitchen, so I modeled the items needed for re-teaching how to make porridge. I found ready-made models on The Base Mesh (https://thebasemesh.com/) and modified and textured them to suit our needs. When the kitchen was finished, Suvi started modeling the bathroom for the next scenario, and I modeled a wall-mounted shower seat with height-adjustable armrests needed in the bathroom scenario. The project's time ran out before we finished the bathroom, but it's waiting if the project continues. Suvi later used the shower seat aid in her next project, Kotikulma.

Final words

It was very interesting to take part in the project from the very beginning. I learned more about version control, visualizing a whole project, scheduling and project management. It was also fun to be involved in a VR implementation where you control an NPC with your voice, and to hear myself voicing the grandmother 😀 We had a nice team, and I think the end result was good considering the time we were given. On to new projects! All the best!

…And now we give the mic to Miro!

Text & images by Miro Norring

Hello, my name is Miro, and starting in January this year I was part of the team at Helsinki XR Center responsible for developing the virtual rehabilitation software. Our goal was to create a simulation in which the user guides an elderly person or someone with mild cognitive impairment using speech.

I specialize in game software development, and in this project I was responsible for developing the logic and functionality of the non-player character (NPC), integrating the external speech recognition application into our software and training it, and programming the simulation's steps along with the supporting systems that track progress.

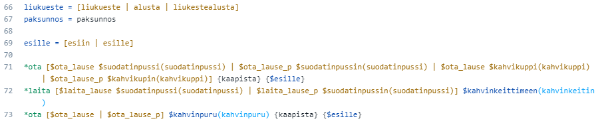

The first simulated scenario was "Brewing coffee", in which the user has to guide the NPC through the task using speech only. I used a speech recognition application called Speechly to listen to the user. It processed the spoken sentences and filtered them based on context and keywords, and the NPC then tried to act on the sentence if it was valid. Speechly's Finnish recognition was not yet fully mature, so the user had to articulate clearly.

Speechly

During development we used Speechly, a Finnish speech recognition service. Its prototype support for the Finnish language made it possible to develop the whole project in Finnish. Speechly offers a specialized, trainable model for the chosen language; in other words, you get your own Speechly instance to use in your software.

The instance is trained using Speechly's own syntax: you write example sentences that users might say, built from keywords and variables such as optional words or synonyms. Each example is then tagged with a context word so that the result can be summarized easily and used in the application. This made it possible to handle the recognized words and sentences in several layers in our own software.
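
To make the idea of keywords, synonyms and context words more concrete, here is a small Python sketch of keyword-based intent matching. It is not Speechly's syntax or API: the synonym table, the intent names (`pick_up`, `fill_jug`, `plug_in`) and the `classify` function are hypothetical, and a real speech model does far more than string matching. The sketch only shows how a recognized sentence can be reduced to a context word plus the keywords it mentions.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field

# Hypothetical synonym table: each canonical keyword maps to the spoken
# forms that count as that keyword.
SYNONYMS: dict[str, set[str]] = {
    "milk": {"milk", "cream"},
    "jug": {"jug", "pot", "carafe"},
    "water": {"water"},
    "cord": {"cord", "plug", "cable"},
}


@dataclass
class Intent:
    name: str  # the "context word" attached to the example sentences
    verbs: set[str]  # at least one of these phrases must be spoken
    objects: set[str] = field(default_factory=set)  # all must be mentioned


# Hypothetical intents for the coffee scenario.
INTENTS = [
    Intent("pick_up", {"pick up", "take", "grab"}, {"milk"}),
    Intent("fill_jug", {"fill"}, {"jug", "water"}),
    Intent("plug_in", {"plug in", "connect"}, {"cord"}),
]


def classify(sentence: str) -> tuple[str, set[str]] | None:
    """Return (context word, keywords found), or None if nothing matches."""
    words = re.findall(r"[a-zåäö]+", sentence.lower())
    padded = " " + " ".join(words) + " "  # lets us match whole phrases
    found = {key for key, forms in SYNONYMS.items() if forms & set(words)}
    for intent in INTENTS:
        has_verb = any(f" {verb} " in padded for verb in intent.verbs)
        if has_verb and intent.objects <= found:
            return intent.name, found
    return None


print(classify("Pick up the milk, please"))     # ('pick_up', {'milk'})
print(classify("Fill the pot with water")[0])   # fill_jug
print(classify("Fill the jug"))                 # None ("water" never said)
```

Requiring every listed object keyword is one simple way to make a sentence count only when it is specific enough; a trained recognizer would handle word order, inflection and Finnish grammar far better than this.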

I then integrated the Speechly instance into our software and programmed the NPC to listen and behave according to the user's instructions. It would follow commands such as "pick up milk" and "fill the jug with water", and it would also tell the user if they said something it did not understand, or something it couldn't do in the current situation (for example, it cannot turn on the coffee machine before the power cord is plugged in). The commands also intentionally required a certain degree of precision: if the user was too vague, something could go wrong, such as water being spilled everywhere or a step being left undone, which would leave the scenario incomplete.
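
The NPC's possible reactions (carry out the command, say it wasn't understood, explain it can't be done yet, or go wrong because the command was too vague) can be sketched as a small precondition check. This is an illustrative Python sketch, not the project's Unity code: the action names, the `PRECONDITIONS`, `EFFECTS` and `REQUIRED_DETAIL` tables and the `react` function are all hypothetical, and the kitchen state is modeled as a plain set of facts.

```python
from __future__ import annotations

from enum import Enum


class Reaction(Enum):
    DO_IT = "performs the action"
    NOT_UNDERSTOOD = "says she didn't understand"
    CANNOT_YET = "explains she can't do that yet"
    MISHAP = "does it, but something goes wrong"


# Hypothetical preconditions: facts that must already hold in the kitchen.
PRECONDITIONS: dict[str, set[str]] = {
    "plug_in": set(),
    "fill_jug": set(),
    "pour_water": {"jug_filled"},
    "switch_on": {"plugged_in", "water_poured"},
}

# Hypothetical effects: the fact each successful action makes true.
EFFECTS: dict[str, str] = {
    "plug_in": "plugged_in",
    "fill_jug": "jug_filled",
    "pour_water": "water_poured",
    "switch_on": "machine_on",
}

# Hypothetical: commands that go wrong if the user leaves out a detail.
REQUIRED_DETAIL: dict[str, str] = {"fill_jug": "amount"}


def react(intent: str | None, details: set[str],
          world: frozenset[str]) -> tuple[Reaction, frozenset[str]]:
    """Decide how the NPC reacts and return the new world state."""
    if intent is None or intent not in PRECONDITIONS:
        return Reaction.NOT_UNDERSTOOD, world
    if PRECONDITIONS[intent] - world:  # some precondition is still missing
        return Reaction.CANNOT_YET, world
    needed = REQUIRED_DETAIL.get(intent)
    if needed is not None and needed not in details:
        # Too vague: the action happens badly and the step stays undone.
        return Reaction.MISHAP, world | {"water_spilled"}
    return Reaction.DO_IT, world | {EFFECTS[intent]}


world = frozenset()
for intent, details in [("switch_on", set()), ("plug_in", set()),
                        ("fill_jug", set()), ("fill_jug", {"amount"}),
                        ("pour_water", set()), ("switch_on", set()),
                        (None, set())]:
    reaction, world = react(intent, details, world)
    print(intent, "->", reaction.name)
# Prints CANNOT_YET, DO_IT, MISHAP, DO_IT, DO_IT, DO_IT, NOT_UNDERSTOOD
```

Keeping preconditions and effects as data rather than hard-coded branches makes it easy to add steps for later scenarios, such as the porridge task, without touching the reaction logic.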

NPC

Since the idea was to simulate an elderly person or someone who had trouble functioning on their own, our 3D artist Julia created an elderly female NPC for the software, whom we affectionately called "Granny" ("Mummo") during development. The user would instruct Granny on how to brew coffee by herself, and she reacted to the commands either negatively or positively, or not at all if the command was invalid. If she was left to her own devices for too long, she would get distracted and start to wander around and do her own thing. She could also already know how to do a step or two of the process: this was decided randomly at the start of the simulation, and the user could ask Granny whether she knew what to do next. I created a scenario tracker that ran in the background and allowed or denied Granny certain interactions based on the steps completed so far.

Overall, this AI logic combined three parts: the speech recognition script, which translated the user's commands into actions the NPC could perform; the scenario tracker, which kept track of the completed steps and their proper order; and the AI script, which used data from the other two to control the NPC's actions.
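
The scenario tracker and Granny's idle behavior can be sketched as a small state object. Again, this is a hypothetical Python illustration rather than the project's code: the step list, the strictly linear step order (a simplification; the real tracker may have allowed more flexible ordering), the randomly "remembered" steps and the 30-second distraction threshold are all assumptions.

```python
from __future__ import annotations

import random
from dataclasses import dataclass, field

# Hypothetical step order for the coffee scenario.
STEPS = ["fill_jug", "pour_water", "add_filter", "add_coffee",
         "plug_in", "switch_on"]


@dataclass
class ScenarioTracker:
    steps: list[str]
    completed: list[str] = field(default_factory=list)
    known: set[str] = field(default_factory=set)  # steps Granny remembers
    idle: float = 0.0  # seconds since the last completed step
    distraction_after: float = 30.0  # hypothetical threshold

    @classmethod
    def start(cls, steps: list[str], rng: random.Random,
              max_known: int = 2) -> ScenarioTracker:
        # At start-up Granny randomly "remembers" up to max_known steps.
        known = set(rng.sample(steps, rng.randint(0, max_known)))
        return cls(steps=list(steps), known=known)

    def next_step(self) -> str | None:
        """First step not yet completed, or None when everything is done."""
        return next((s for s in self.steps if s not in self.completed), None)

    def is_allowed(self, step: str) -> bool:
        # Simplification: only the next unfinished step may be performed.
        return step == self.next_step()

    def complete(self, step: str) -> bool:
        """Record a step if its turn has come; return whether it was accepted."""
        if not self.is_allowed(step):
            return False
        self.completed.append(step)
        self.idle = 0.0
        return True

    def knows_next(self) -> bool:
        """Answer to the user asking 'Do you know what to do next?'."""
        step = self.next_step()
        return step is not None and step in self.known

    def tick(self, dt: float) -> bool:
        """Advance idle time; True means Granny gets distracted and wanders."""
        self.idle += dt
        return self.idle >= self.distraction_after

    @property
    def finished(self) -> bool:
        return self.next_step() is None


tracker = ScenarioTracker.start(STEPS, random.Random(42))
print(tracker.next_step())            # fill_jug
print(tracker.complete("switch_on"))  # False: not its turn yet
print(tracker.complete("fill_jug"))   # True
```

In this sketch the AI script would call `tick` every frame, consult `is_allowed` before animating an action, and use `knows_next` to pick Granny's answer; passing in a seeded `random.Random` keeps the "remembered" steps reproducible when testing.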

Outro

Even though our schedule was tight and we had to make some compromises on the direction of the software, I had fun and the team was great. I tackled a lot of unfamiliar concepts, but I am satisfied with the outcome. The project taught me a lot, and I made a point of using unfamiliar methods during development, which turned out to be both fun and educational.

To see previous news about our trainees’ projects, head over to the Trainee news section.
