Join our Technology Expert Santeri Saarinen on his visit to Barcelona! Here he recounts the visually rich details of the event and his impressions of the technology exhibits, as well as his personal experiences on the trip. The article consists of four parts; read on to find out about Day 2!

You can also find the highlights for Day 1, Day 3 and Day 4!

Text and images by Santeri Saarinen

Day 2 – Personal Tech, Healthcare and Otherwise

As my trip was partially funded by the STAI (Simulation, Testbed and AI services for healthcare focused companies) project, a national counterpart to the European TEF-Health project, I was very interested in participating in the Digital Health programme, which ran throughout MWC. One of the most interesting parts of that programme was the panel discussion on Enabling Remote Healthcare.

Gilles Lunzenfichter, CEO of Medisante, opened the morning on an excellent note: “We need to collect vital data from the elderly at home. What makes this difficult is that the most vulnerable, who need the most telemonitoring, are often not used to digital tools at all.” This should be the starting point for all telemonitoring. The patient should not need to know how the device works, how to maintain it or how to send data to the doctor. All of this should be handled automatically.

Alicia Rami from Fortrea highlighted that while the patient is at home, “everything” should be collected. How much technology is required for “everything”? Patients don’t understand the technology, which causes them additional stress. “Why is my device beeping in the middle of the night? What does it mean? Am I in trouble?” We should provide the user with feedback that they can understand, and make everything else invisible to them.

Panel discussion, Enabling Remote Healthcare

Edouard Gasser, CEO of Tilak Healthcare, continued on this note, building on his background in gaming: “The patients need to enjoy monitoring themselves at home.” We should develop content that suits the target group, and that is the easy part. People already play games, so getting them to play a new one is not a problem. The problem is figuring out how to get the doctor to prescribe playing a game. It is extremely important to build trust with the doctors, and to help them understand how the system benefits their patients.

Lunzenfichter also threw down a great challenge to European healthcare: “We are five years behind the United States. The US implemented reimbursement policies for remote health monitoring in 2019. The EU is doing it now. So we have a gap to close.” Gasser added another challenge: integration. Healthcare systems across Europe are so varied and fragmented that integrating into this ecosystem is very difficult.

There was a clear focus on lightweight AR glasses, as several companies brought forth their new devices. Some were available for testing, others only to be gawked at behind glass, but all of them were interesting. If this form factor becomes the norm, we might one day see people wearing them on the street.

NTT Qonoq Devices showcased a prototype of their upcoming Snapdragon AR2-based XR glasses, which could be released later this year. At this point the device was only shown through video examples, so the actual quality of the content remains to be seen.

RayNeo exhibited their RayNeo X2 glasses, which were originally funded through an Indiegogo campaign. The TCL Electronics subsidiary showcased glasses that might set the bar for high-end AR content. The quality, controllability and voice operation were very good. The only downside was that the device was still quite bulky and its “cool factor” fairly low, which reduces the willingness to wear it in public. This was mainly due to the large size of the lenses. The glasses are still available on Indiegogo for a couple of days, with deliveries expected to start later this spring.

Another prototype available for testing was OPPO’s Air Glass 3. The form factor was extremely light and easy to wear, while offering bright colors and clearly visible content. For a low-immersion, additive AR content channel, this device would be excellent for daily use. The clarity of the display comes from their new self-developed waveguide lenses, which offered great brightness. The device also supports touch controls and voice interaction. OPPO is also collaborating with Qualcomm and AlpsenTek to bring more AI capabilities to their devices, which could open up interesting opportunities. It is no wonder they won a “Best of MWC 2024” recognition at the event. This is definitely a device, and a company, to keep an eye on.

NTT Qonoq Devices XR glass prototype

OPPO Air Glass 3 prototype

Another interesting company at the show was OPPO’s collaborator Mojie, a hardware developer leading global research on resin diffractive waveguide and light engine technology. They had several models on display in their booth, showcasing their collaborations with OPPO, ZTE and Xingji Meizu. Being from Finland makes us especially interested in this, as our own Dispelix works in the same sector, so it’s good to keep a lookout for global competitors.

The panel discussions continued with a look into the industrial metaverse, and how it has become a reality while the consumer side is still trying to find its feet. Ingrid Cotoros from Meta described the industrial metaverse as a natural evolution of the consumer metaverse. But while it offers great benefits for many sectors, it also brings radical changes to working habits and people’s daily routines, which might cause pushback. In the end, though, metaverse solutions allow companies to find issues and solutions faster, leading to a more sustainable industry. Soma Velayutham from Nvidia agreed but reminded everyone of the key difference between simulations and digital twins. A digital twin needs to be synced with reality, offering actual data from the real world, ideally in real time. This connection to the real world allows people to build and design real things in the metaverse without creating physical waste, while still getting realistic, usable results.

Nokia’s showcase area

Velayutham also brought up an interesting use case involving AI. Because the metaverse can display a replica of the real world, it can stand in for the real world when training AI. We can train AI in the metaverse instead of a physical environment, leading to even more effective and sustainable solutions. For example, a self-driving car could be taught to drive in a virtual city rather than on a real-world test track, and when the virtual content is realistic enough, the results transfer to the real world. Anissa Bellini of Dassault Systèmes brought the discussion back from AI to real workers and their training. She described how we could use AI to predict how the environment reacts to the user, based on historical data from the real world. This data could also be used to simulate longer time scales, making training more effective and allowing users to see not only the consequences of their actions but also what led to the situation in which they had to make the choice.

Nokia’s Jane Rygaard talked about collaboration in the industry. The metaverse tech stack includes so many different technologies, devices and solutions that no company can build everything on its own. Because of this, we need open collaboration within the industry to carry us into the future. Velayutham agreed, adding that for the collaboration to work, we need open standards for data. Formats like USD for 3D scenes are a great starting point. He also mentioned that 5G networks are capable, but so far they haven’t really delivered on their promises. However, when we move to the metaverse on a larger scale, near-zero latency becomes extremely important, because we will be interacting with the physical world and physical devices in real time in critical situations. Rygaard added that traffic volumes will most likely grow so much that there will be a clear need to move from 5G to 6G.

Volumetric video for collaboration by Nokia

It was great to see Nokia represented with one of the largest booths at the event. In the end, though, they clearly concentrated on private meetings, and the things on display lacked the wow effect that many of the companies with more of a general-visitor focus had. However, it was great to see familiar faces as Ville-Veikko Mattila and Emre Aksu showcased their new real-time volumetric capture streaming solutions. Plans are in motion to collaborate further with Nokia on this technology, so hopefully we will see more results together in the future.

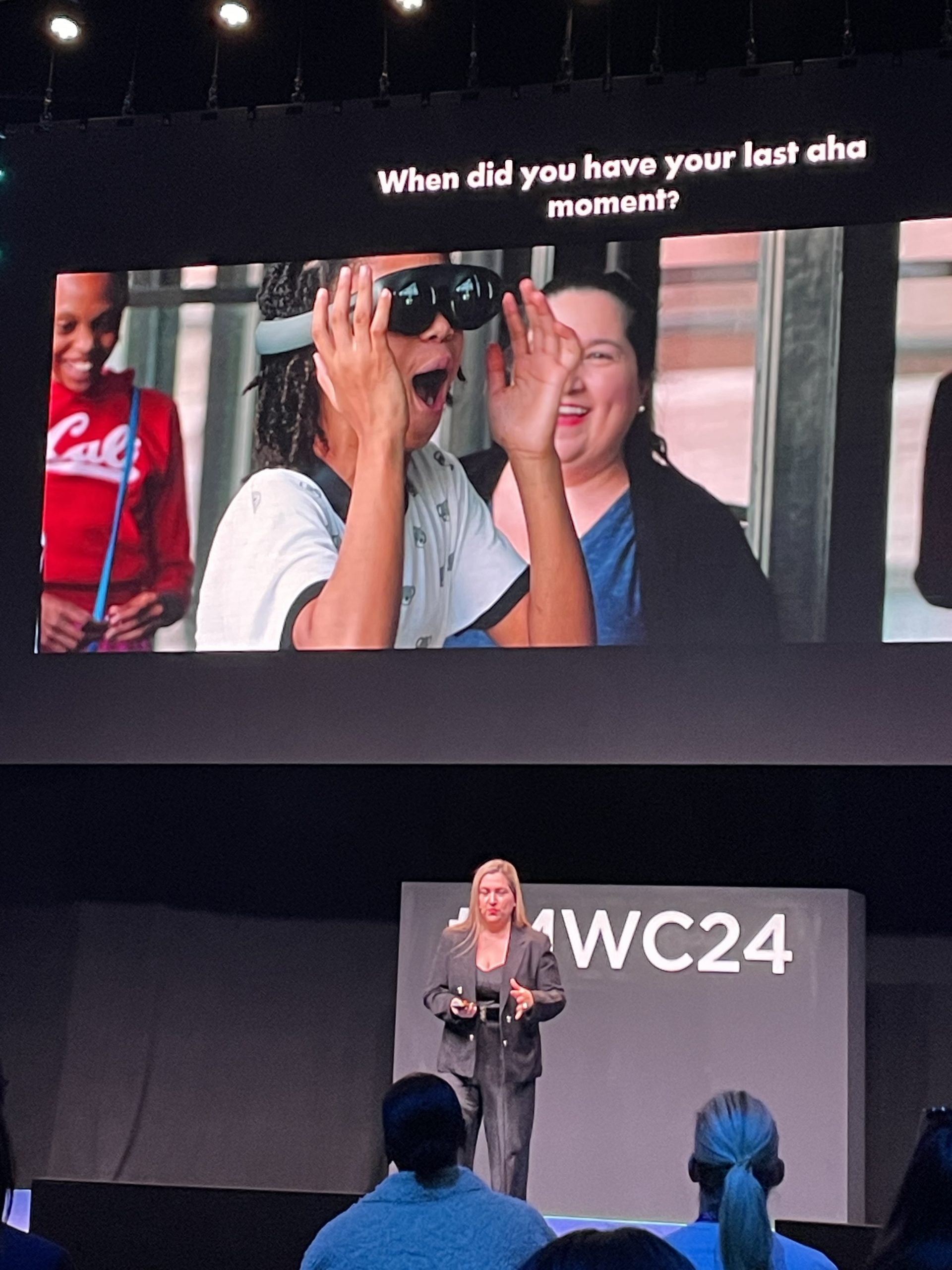

During lunch hour, Cathy Hackl from Spatial Dynamics discussed the future of AI, spatial computing and personal tech. She pointed out that AI is one of the keys to unlocking spatial computing. In spatial computing, AI has four main areas of advancement in 2024: Vision, Perception, Perspective and Context. She predicted that instead of LLMs, we’ll start to see more LVMs, or Large Vision Models, which are trained on real-time video data from the real world. As people start using glasses everywhere, and their content is increasingly anchored and connected to the real world, every surface becomes an interface that we can utilize in different ways. Cathy also echoed the words of my friend Wesa Aapro from YLE: “For generation alpha, what happens in the virtual world is as real to them as the real world is to us.” Virtual experiences are becoming more and more important to people, so they should be taken seriously even by those who are not that interested in joining in themselves.

One of the most talked-about devices at MWC 2024 was Humane’s AI Pin, a tiny wearable device you interact with through gestures and voice. Its custom AI-OS automates many tasks and allows for a more seamless and effortless computing experience. The wearer can make calls, take photos, record video and query the AI. It feels like a more expansive AI smart speaker. I’m not sure how I feel about wearing one constantly, but it was an interesting direction to take the tech.

Cathy Hackl describing what it feels like to experience something for the first time

Humane’s AI Pins on show at Qualcomm’s booth

NTT Docomo’s Feel Tech demo brought haptics into the picture with a pet dog in virtual reality. The haptic feedback was pretty convincing, whether it was the dog pulling on its leash or me picking up a cube. The quality of the feedback leaves me keen to follow the development of their Feel Tech platform, which was announced late last year. The goal of the platform is to share haptic feedback, among other senses, between users. This would allow users, for example, to relive other people’s experiences, including the sense of touch they had.

Another interesting piece of haptic equipment was SenseGlove. The gloves, which add haptic feedback and hand tracking to XR solutions, felt easy to use, and the tracking worked quite well. Compared to NTT Docomo, I think the haptic feedback was a bit lacking, though I would like another round of testing: the demo was over quickly and jumped from one interaction to the next without a chance to actually feel and compare. Still, a big shoutout to Dheeraj Adnani for a great presentation. I’d be happy to continue discussions on collaboration in the future.

NTT Docomo Feel Tech

SenseGlove, a haptic feedback controller for XR solutions

Going between the booths of NTT and SenseGlove also gave a nice picture of the variety on offer at MWC, from the huge booths of the big players to the tiny tables in the startup areas, with both offering equally interesting ideas.

Hall 8 full of people meeting all of the startups

One of the more futuristic demos at MWC was e&’s humanoid robot, which you could have a conversation with. The robot’s hand movements and facial expressions made it feel very natural, and its LLM-powered ability to converse with visitors drew huge crowds every time I passed by. Time to replace the butler at our office with one of these.

Another robotic friend I found was Eurecat’s caretaker robot, designed in the Never Home Alone (NHoA) project. The idea of the robot is to remotely monitor elderly people’s health while offering cognitive and physical stimulation and encouraging healthy lifestyle habits. It also helps prevent loneliness and isolation, and offers support with domestic tasks. The whole NHoA project was very interesting, as the number of senior citizens who live at home but require assistance and monitoring is growing all the time.

e&’s humanoid robot

Eurecat’s senior assistant robot